Consensus in the Presence of Partial Synchrony

Cynthia Dwork, Nancy Lynch and Larry Stockmeyer

CYNTHIA DWORK AND NANCY LYNCH, Massachusetts Institute of Technology, Cambridge, Massachusetts, AND LARRY STOCKMEYER, IBM Almaden Research Center, San Jose, California

Abstract. The concept of partial synchrony in a distributed system is introduced. Partial synchrony lies between the cases of a synchronous system and an asynchronous system. In a synchronous system, there is a known fixed upper bound Δ on the time required for a message to be sent from one processor to another and a known fixed upper bound Φ on the relative speeds of different processors. In an asynchronous system no fixed upper bounds Δ and Φ exist. In one version of partial synchrony, fixed bounds Δ and Φ exist, but they are not known a priori. The problem is to design protocols that work correctly in the partially synchronous system regardless of the actual values of the bounds Δ and Φ. In another version of partial synchrony, the bounds are known, but are only guaranteed to hold starting at some unknown time T, and protocols must be designed to work correctly regardless of when time T occurs. Fault-tolerant consensus protocols are given for various cases of partial synchrony and various fault models. Lower bounds that show in most cases that our protocols are optimal with respect to the number of faults tolerated are also given. Our consensus protocols for partially synchronous processors use new protocols for fault-tolerant “distributed clocks” that allow partially synchronous processors to reach some approximately common notion of time.
Categories and Subject Descriptors: C.2.4 [Computer-Communication Networks]: Distributed Systems - distributed applications; distributed databases; network operating systems; C.4 [Computer Systems Organization]: Performance of Systems - reliability, availability, and serviceability; H.2.4 [Database Management]: Systems - distributed systems

General Terms: Algorithms, Performance, Reliability, Theory, Verification

Additional Key Words and Phrases: Agreement problem, Byzantine Generals problem, commit problem, consensus problem, distributed clock, distributed computing, fault tolerance, partially synchronous system

A preliminary version of this paper appears in Proceedings of the 3rd ACM Symposium on Principles of Distributed Computing (Vancouver, B.C., Canada, Aug. 27-29). ACM, New York, 1984, pp. 103-118.

The work of C. Dwork was supported by a Bantrell Postdoctoral Fellowship. The work of N. Lynch was supported in part by the Defense Advanced Research Projects Agency under contract N00014-83-K-0125, the National Science Foundation under grants DCR 83-02391 and MCS 83-06854, the Office of Army Research under contract DAAG29-84-K-0058, and the Office of Naval Research under contract N00014-85-K-0168.

Authors’ addresses: C. Dwork and L. Stockmeyer, Department K53/802, IBM Almaden Research Center, 650 Harry Road, San Jose, CA 95120; N. Lynch, Laboratory for Computer Science, Massachusetts Institute of Technology, 545 Technology Square, Cambridge, MA 02139.

Permission to copy without fee all or part of this material is granted provided that the copies are not made or distributed for direct commercial advantage, the ACM copyright notice and the title of the publication and its date appear, and notice is given that copying is by permission of the Association for Computing Machinery. To copy otherwise, or to republish, requires a fee and/or specific permission.

© 1988 ACM 0004-5411/88/0400-0288 $01.50

Journal of the Association for Computing Machinery, Vol. 35, No. 2, April 1988, pp. 288-323.

1. Introduction

1.1 BACKGROUND. The role of synchronism in distributed computing has recently received considerable attention [1, 4, 10]. One method of comparing two models with differing amounts or types of synchronism is to examine a specific problem in both models. Because of its fundamental role in distributed computing, the problem chosen is often that of reaching agreement. (See [8] for a survey; see also [6], [11], [12], and [18], for example.) One version of this problem considers a collection of N processors, p_1, ..., p_N, which communicate by sending messages to one another. Initially each processor p_i has a value v_i drawn from some domain V of values, and the correct processors must all decide on the same value; moreover, if the initial values are all the same, say v, then v must be the common decision. In addition, the consensus protocol should operate correctly if some of the processors are faulty, for example, if they crash (fail-stop faults), fail to send or receive messages when they should (omission faults), or send erroneous messages (Byzantine faults).

Fix a particular type of fault. Given assumptions about the synchronism of the message system and the processors, one can characterize the model by its resiliency, the maximum number of faults that can be tolerated in any protocol in the given model. For example, it might be assumed that there is a fixed upper bound Δ on the time for messages to be delivered (communication is synchronous) and a fixed upper bound Φ on the rate at which one processor’s clock can run faster than another’s (processors are synchronous), and that these bounds are known a priori and can be “built into” the protocol. In this case N-resilient consensus protocols exist for Byzantine failures with authentication [3, 15] and, therefore, also for fail-stop and omission failures; in other words, any number of faults can be tolerated.
For Byzantine faults without authentication, t-resilient consensus is possible iff N ≥ 3t + 1 [14, 15].

Recent work has shown that the existence of both bounds Δ and Φ is necessary to achieve any resiliency, even under the weakest type of faults. Dolev et al. [4], building on earlier work of Fischer et al. [10], prove that if either a fixed upper bound Δ on message delivery time does not exist (communication is asynchronous) or a fixed upper bound Φ on relative processor speeds does not exist (processors are asynchronous), then there is no consensus protocol resilient to even one fail-stop fault.

In this paper we define and study practically motivated models that lie between the completely synchronous and completely asynchronous cases.

1.2 PARTIALLY SYNCHRONOUS COMMUNICATION. We first consider the case in which processors are completely synchronous (i.e., Φ = 1) and communication lies “between” synchronous and asynchronous. There are at least two natural ways in which communication might be partially synchronous.

One reasonable situation could be that an upper bound Δ on message delivery time exists, but we do not know what it is a priori. On the one hand, the impossibility results of [4] and [10] do not apply since communication is, in fact, synchronous. On the other hand, participating processors in the known consensus protocols need to know Δ in order to know how long to wait during each round of message exchange. Of course, it is possible to pick some arbitrary Δ to use in designing the protocol, and say that, whenever a message takes longer than this Δ, then either the sender or the receiver is considered to be faulty. This is not an acceptable solution to the problem since, if we picked Δ too small, all the processors

could soon be considered faulty, and by definition the decisions of faulty processors do not have to be consistent with the decision of any other processor. What we would like is a protocol that does not have Δ “built in.” Such a protocol would operate correctly whenever it is executed in a system where some fixed upper bound Δ exists. It should also be mentioned that we do not assume any probability distribution on message transmission time that would allow Δ to be estimated by doing experiments.

Another situation could be that we know Δ, but the message system is sometimes unreliable, delivering messages late or not at all. As noted above, we do not want to consider a late or lost message as a processor fault. However, without any further constraint on the message system, this “unreliable” message system is at least as bad as a completely asynchronous one, and the impossibility results of [4] apply. Therefore, we impose an additional constraint: For each execution there is a global stabilization time (GST), unknown to the processors, such that the message system respects the upper bound Δ from time GST onward.

This constraint might at first seem too strong: In realistic situations, the upper bound cannot reasonably be expected to hold forever after GST, but perhaps only for a limited time. However, any good solution to the consensus problem in this model would have an upper bound L on the amount of time after GST required for consensus to be reached; in this case it is not really necessary that the bound Δ hold forever after time GST, but only up to time GST + L. We find it technically convenient to avoid explicit mention of the interval length L in the model, but will instead present the appropriate upper bounds on time for each of our algorithms.

Instead of requiring that the consensus problem be solvable in the GST model, we might think of separating the correctness conditions into safety and termination properties.
The safety conditions are that no two correct processors should ever reach disagreement, and that no correct processor should ever make a decision that is contrary to the specified validity conditions. The termination property is just that each correct processor should eventually make a decision. Then we might require an algorithm to satisfy the safety conditions no matter how asynchronously the message system behaves, that is, even if A does not hold eventually. On the other hand, we might only require termination in case A holds eventually. It is easy to see that these safety and termination conditions are equivalent to our GST condition: If an algorithm solves the consensus problem when A holds from time GST onward, then that algorithm cannot possibly violate a safety property even if the message system is completely asynchronous. This is because safety violations must occur at some finite point in time, and there would be some continuation of the violating execution in which A eventually holds. Thus, the condition that A holds from some time GST onward provides a second reasonable definition for partial communication synchrony. Once again, it is not clear how we could apply previously known consensus protocols to this model. For example, the same argument as for the case of the unknown bound shows that we cannot treat lost or delayed messages in the same way as processor faults. For succinctness, we say that communication is partially synchronous if one of these two situations holds: A exists but is not known, or A is known and has to hold from some unknown point on. Our results determine precisely, for four interesting fault models, the maxi- mum resiliency possible in cases where communication is partially synchronous. For fail-stop or omission faults we show that t-resilient consensus is possible iff N 2 2t + 1. For Byzantine faults with authentication, we show that t-resilient consensus is possible iff N 2 3t + 1. Also, for Byzantine faults without authenti-

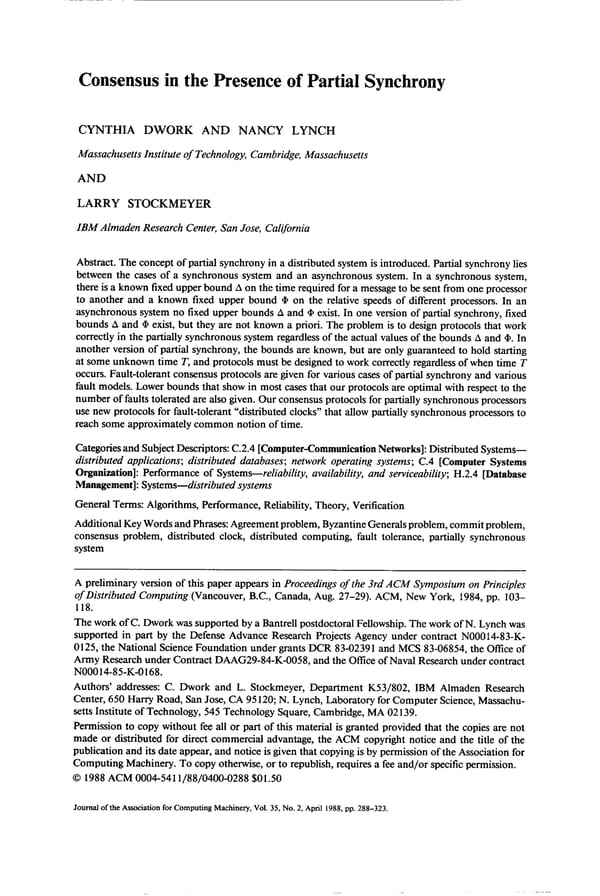

Consensus in the Presence of Partial Synchrony 291 TABLE I. SMALLEST NUMBER OF PROCESSORS Nmi. FOR WHICH A I-RimxiT CONSENSUS PROTOCOL EXISTS Partially syn- Partially syn- Partially syn- chronous pro- chronous com- chronous cessors and munication and communica- synchronous Failure type Syn- Asyn- synchronous tion and pro- communica- chronous chronous processors cessors tion Fail-stop t Ca 2t-k 1 2t+ 1 t Omission t m 2t+ 1 2t+ 1 [2t, 2t + l] Authenticated Byzantine t m 3t+ 1 3t+ 1 2t+ 1 Byzantine 3t+1 m 3t+ 1 3t+ 1 3t+ 1 cation, we show that t-resilient consensus is possible iff N 2 3t + 1. (The “only if” direction in this case follows immediately from the result for the completely synchronous case in [ 151.) For all four types of faults, the time required for all correct processors to reach consensus is (1) a polynomial in N and A, for the model in which A is unknown; and (2) GST plus a polynomial in N and A, for the GST model. All of our protocols that reach consensus within time polynomial in parameters such as iV, A, and GST also have the property that the total number of message bits sent is also bounded above by a polynomial in the same parameters. Table I shows the maximum resiliency in various cases and compares our results with previous work. The results where communication is partially synchronous and processors are synchronous are shown in column 3 of the table; the results in columns 4 and 5 will be explained shortly. In each case, the table gives Nmin, the smallest value of N (N L 2) for which there is a t-resilient protocol (t > 1). (Some of the lower bounds on Nmin in the last column of the table have slightly stronger constraints on t and N, which are given in the formal statements of the theorems.) Results in the synchronous column are due to [3], [5], and [ 151, and those in the asynchronous column are due to [4] and [lo]. The table entry that is the closed interval [2t, 2t + I] means that 2t 5 iVmin 5 2t + 1. 
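As a small worked reading of column 3 of Table I, the bounds N ≥ 2t + 1 and N ≥ 3t + 1 can be inverted to give the largest number of faults a system of N processors can tolerate when communication is partially synchronous. The sketch below is only an illustration of that arithmetic; the function name and the fault-type strings are hypothetical labels and do not come from the paper.

```python
def max_faults_partial_sync_comm(n: int, fault_type: str) -> int:
    """Largest t such that n >= N_min(t), reading column 3 of Table I.

    The fault_type strings are hypothetical labels chosen for this sketch.
    """
    if fault_type in ("fail-stop", "omission"):
        return (n - 1) // 2   # N >= 2t + 1
    if fault_type in ("authenticated-byzantine", "byzantine"):
        return (n - 1) // 3   # N >= 3t + 1
    raise ValueError(f"unknown fault type: {fault_type}")


if __name__ == "__main__":
    for kind in ("fail-stop", "omission", "authenticated-byzantine", "byzantine"):
        print(kind, max_faults_partial_sync_comm(7, kind))
```

For example, under these bounds seven processors tolerate three omission faults but only two Byzantine faults, with or without authentication.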
It is interesting to note that, for fail-stop, omission, and Byzantine faults with authentication, the maximum resiliency for partially synchronous communication lies strictly between the maximum resiliency for the synchronous and asynchronous cases. It is also interesting to note that, for partially synchronous communication, authentication does not improve resiliency.

Our protocols use variations on a common method: A processor p tries to get other processors to change to some value v that p has found to be “acceptable”; p decides v if it receives sufficiently many acknowledgments from others that they have changed their value to v, so that a value different from v will never be found acceptable at a later time. Similar methods have already appeared in the literature (e.g., see [2], [19]).

Reischuk [17] and Pinter [16] have also obtained consensus results that treat message and processor faults separately.

1.3 PARTIALLY SYNCHRONOUS COMMUNICATION AND PROCESSORS. It is easy to extend the models described in Section 1.2 to allow processors, as well as communication, to be partially synchronous. That is, Φ (the upper bound on relative processor speed) can exist but be unknown, or Φ can be known but actually hold only from some time GST onward. We obtain results that completely characterize the resiliency in cases in which both communication and processors are partially synchronous, for all four classes of faults. In such cases we assume that

communication and processors possess the same type of partial synchrony; that is, either both Φ and Δ are unknown, or both hold from some time GST on. Surprisingly, the bounds we obtain are exactly the same as for the case in which communication alone is partially synchronous; see column 4 of Table I. (The only difference is that in this case the polynomial bounds on time depend on N, Δ, and Φ.)

In the earlier case the fact that Φ was equal to 1 implied that each processor could maintain a local time that was guaranteed to be perfectly synchronized with the local times of other processors. In this case no such notion of time is available. We give two new protocols allowing processors to simulate distributed clocks. (These are fault-tolerant variations on the clock used by Lamport in [13].) One uses 2t + 1 processors and tolerates t fail-stop, omission, or authenticated Byzantine faults, while the other uses 3t + 1 processors and tolerates t unauthenticated Byzantine faults. When the appropriate clock is combined with each of our protocols for the case where only communication is partially synchronous, the result is a new protocol for the case in which both communication and processors are partially synchronous.

1.4 PARTIALLY SYNCHRONOUS PROCESSORS. In analogy to our treatment of partial communication synchrony, it is easy to define models where processors are partially synchronous and communication is synchronous (Δ exists and is known a priori). The last column of Table I summarizes our results for this case. Once again, time is polynomial (this time in N, Δ, and Φ).

The basic strategy used in constructing the protocols for this case also involves combining a consensus protocol that assumes processor synchrony with a distributed clock protocol.
For fail-stop faults and Byzantine faults with authentication, either the distributed clock or the consensus protocol can tolerate more failures than the corresponding clock or consensus protocol used for the case in which both communication and processors are partially synchronous, so we obtain better resiliencies.

Technical Remarks

(1) Our protocols assume that an atomic step of a processor is either to receive messages or to send a message to a single processor, but not both; there is neither an atomic receive/send operation nor an atomic broadcast operation. We adopt this rather weak definition of a processor’s atomic step in this paper because it is realistic in practice and seems consistent with assumptions made in much of the previous work on distributed agreement. However, our lower bound arguments are still valid if a processor can receive messages and broadcast a message to all processors in a single atomic step.

(2) The strong unanimity condition requires that, if all initial values are the same, say v, then v must be the common decision. Weak unanimity requires this condition to hold only if no processor is faulty. Unless noted otherwise, our consensus protocols achieve strong unanimity, and our lower bounds hold even for weak unanimity. In the case, however, of Byzantine faults with authentication and partially synchronous processors, the upper bound 2t + 1 in the last column of Table I holds for strong unanimity only if the initial values are signed by a distinguished “sender.” This assumption is also used in the algorithm of [3] for the completely synchronous case. (For weak unanimity, the upper bound 2t + 1 in the last column holds even without signed initial values.) We discuss this further in Section 6, which is the first place where the issue of whether the initial values are signed has any effect on our results.

(3) Our consensus protocols are designed for an arbitrary value domain V, whereas our lower bounds hold even for the case |V| = 2.

The remainder of this paper is organized as follows: Section 2 contains definitions. Section 3 contains our basic protocols, presented in a basic round model, which has more power than the models in which we are really interested. Section 4 contains our results for the model in which processors are synchronous and communication is partially synchronous. In particular, the protocols of Section 3 are adapted to this model. The distributed clocks are defined in Section 5, where we also discuss how to combine the results of Section 3 with the clocks to produce protocols for the model in which both processors and communication are partially synchronous. Section 6 contains our results for the case in which processors are partially synchronous and communication is synchronous.

2. Definitions

2.1 MODEL OF COMPUTATION. Our formal model of computation is based on the models of [4] and [10]. Here we review the basic features of the model informally. The communication system is modeled as a collection of N sets of messages, called buffers, one for each processor. The buffer of p_i represents messages that have been sent to p_i, but not yet received. Each processor follows a deterministic protocol involving the receipt and sending of messages. Each processor p_i can perform one of the following instructions in each step of its protocol:

Send(m, p_j): places message m in p_j’s buffer;

Receive(p_i): removes some (possibly empty) set S of messages from p_i’s buffer and delivers the messages to p_i.

In the Send(m, p_j) instruction, p_j can be any processor; that is, the communication network is completely connected. A processor’s protocol is specified by a state transition diagram; the number of states can be infinite. The instruction to be executed next depends on the current state, and the execution causes a state transition.
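The buffer model just described can be pictured with a minimal sketch. The class name `MessageSystem`, the zero-based processor indices, and the use of a seeded random generator to pick which messages a Receive delivers are all assumptions made for illustration; the model itself only says that a Receive removes some (possibly empty) set of messages from the buffer.

```python
import random


class MessageSystem:
    """N buffers, one per processor; buffers[j] holds messages sent to p_j but not yet received."""

    def __init__(self, n, seed=0):
        self.buffers = [[] for _ in range(n)]
        # Hypothetical stand-in for the scheduler: it decides what each Receive delivers.
        self.rng = random.Random(seed)

    def send(self, m, j):
        """Send(m, p_j): place message m in p_j's buffer."""
        self.buffers[j].append(m)

    def receive(self, i):
        """Receive(p_i): remove some (possibly empty) set of messages from p_i's buffer."""
        buf = self.buffers[i]
        delivered = self.rng.sample(buf, self.rng.randint(0, len(buf)))
        for m in delivered:
            buf.remove(m)
        return delivered


if __name__ == "__main__":
    ms = MessageSystem(3)
    ms.send("hello", 1)
    ms.send("world", 1)
    print(ms.receive(1), ms.buffers[1])
```

Nothing in this sketch bounds how long a message may sit in a buffer; the synchrony conditions of Section 2.3 are what constrain the scheduler's choices.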
For a Send instruction, the next state depends only on the current state, whereas, for a Receive instruction, the next state depends also on the set S of delivered messages. The initial state of a processor p_i is determined by its initial value v_i in V. At some point in its computation, a processor can irreversibly decide on a value in V.

For subsequent definitions, it is useful to imagine that there is a real-time clock outside the system that measures time in discrete integer-numbered steps. At each tick of real time, some processors take one step of their protocols. A run of the system is described by specifying the initial states for all processors and by specifying, for each real-time step, (1) which processors take steps, (2) the instruction that each processor executes, and (3) for each Receive instruction, the set of messages delivered. Runs can be finite or infinite. Given an infinite run R, the message m is lost in run R if m is sent by some Send(m, p_j), p_j executes infinitely many Receive instructions in R, and m is never delivered by any Receive(p_j).

2.2 FAILURES. A processor executes correctly if it always performs instructions of its protocol (transition diagram) correctly. A processor is correct if it executes correctly and takes infinitely many steps in any infinite run. We consider four types of increasingly destructive faulty behavior of processor p_i:

Fail-stop: Processor p_i executes correctly, but can stop at any time. Once stopped it cannot restart.

Omission: Faulty processor p_i follows its protocol correctly, but Send(m, p_j), when executed by p_i, might not place m in p_j’s buffer and Receive(p_i) might cause only a subset of the delivered messages to be actually received by p_i. In other words, an omission fault on reception occurs when some set S of messages is delivered to p_i and all messages in S are removed from p_i’s buffer, but p_i follows a state transition as though some (possibly empty) subset S′ of S were delivered.

Authenticated Byzantine: Arbitrary behavior, but messages can be signed with the name of the sending processor in such a way that this signature cannot be forged by any other processor.

Byzantine: Arbitrary behavior and no mechanism for signatures, but we assume that the receiver of a message knows the identity of the sender.

2.3 PARTIAL SYNCHRONY. Let I be an interval of real time and R be a run. We say that the communication bound Δ holds in I for run R provided that, if message m is placed in p_j’s buffer by some Send(m, p_j) at a time s1 in I, and if p_j executes a Receive(p_j) at a time s2 in I with s2 ≥ s1 + Δ, then m must be delivered to p_j at time s2 or earlier. This says intuitively that Δ is an upper bound on message transmission time in the interval I. The processor bound Φ holds in I for R provided that, in any contiguous subinterval of I containing Φ real-time steps, every correct processor must take at least one step. This implies that no correct processor can run more than Φ times slower than another in the interval I.

The following conditions, which define varying degrees of communication synchrony, place constraints on the kinds of runs that are allowed. In these definitions, Δ denotes some particular positive integer:

(1) Δ is known: The communication bound Δ holds in [1, ∞) for every run R. Delta is known: Δ is known for some fixed Δ. This is the usual definition of synchronous communication.
(2) Delta is unknown: For every run R, there is a Δ that holds in [1, ∞).

(3) Δ holds eventually: For every run R, there is a time T such that Δ holds in [T, ∞). Such a time T is called the Global Stabilization Time (GST). Delta holds eventually: Δ holds eventually for some fixed Δ.

If either (2) or (3) holds, we say that communication is partially synchronous.

It is helpful to view each situation as a game between a protocol designer and an adversary. If delta is known, the adversary names an integer Δ, and the protocol designer must supply a consensus protocol that is correct if Δ always holds. If delta is unknown, the protocol designer supplies the consensus protocol first, then the adversary names a Δ, and the protocol must be correct if that Δ always holds. If delta holds eventually, the adversary picks Δ, the designer (knowing Δ) supplies a consensus protocol, and the adversary picks a time T when Δ must start holding.

By replacing Δ by Φ and “delta” by “phi” above, (1) defines synchronous processors, and (2) and (3) define two types of partially synchronous processors.

2.4 CORRECTNESS OF A CONSENSUS PROTOCOL. Given assumptions A about processor and communication synchrony, a fault type F, and a number N of processors and an integer t with 0 ≤ t ≤ N, correctness of a t-resilient consensus protocol is defined as follows: For any set C containing at least N - t processors and any run R satisfying A and in which the processors in C are correct and the behavior of the processors not

in C is allowed by the fault type F, the protocol achieves:

- Consistency. No two different processors in C decide differently.

- Termination. If R is infinite, then every processor in C makes a decision.

- Unanimity. There are two types:
Strong unanimity: If all initial values are v and if any processor in C decides, then it decides v.
Weak unanimity: If all initial values are v, if C contains all processors, and if any processor decides, then it decides v.

In models where messages cannot be lost, such as the models where delta is unknown, our protocols can be easily modified so that all correct processors can halt soon after sufficiently many correct processors have decided. However, we do not require halting explicitly in the termination condition because, as can be easily shown, if messages can be lost before GST in the model where delta holds eventually and if the protocol is 1-resilient to fail-stop faults, then there is some execution in which some correct processor does not halt. Further discussion of the issue of halting is given in Section 4.2, Remark 2, after the protocols have been described.

3. The Basic Round Model

In this section we define the basic round model and present preliminary versions of our algorithms in this model. In the following sections we show how each of our models can simulate the basic model.

3.1 DEFINITION OF THE MODEL. In the basic round model, processing is divided into synchronous rounds of message exchange. Each round consists of a Send subround, a Receive subround, and a computation subround. In a Send subround, each processor sends messages to any subset of the processors. In a Receive subround, some subset of the messages sent to the processor during the corresponding Send subround is delivered. In a computation subround, each processor executes a state transition based on the set of messages just received. Not all messages that are sent need arrive; some can be lost.
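The three subrounds can be sketched as a single function. This is only an illustration under stated assumptions: the names `run_round`, `send`, `transition`, and `lost` are hypothetical, processor states are kept in a dictionary, and message loss is delegated to a caller-supplied predicate so that the sketch places no constraint of its own on which messages arrive.

```python
def run_round(rnd, states, send, transition, lost):
    """Execute one round of a round-based model and return the new states.

    states:     dict mapping processor id -> current state
    send:       send(p, state, rnd) -> dict mapping destination id -> message
    transition: transition(p, state, inbox) -> next state
    lost:       lost(rnd, src, dst) -> True if that message is lost this round
    """
    # Send subround: each processor sends messages to any subset of the processors.
    sent = {}
    for p, st in states.items():
        for dst, msg in send(p, st, rnd).items():
            sent[(p, dst)] = msg
    # Receive subround: some subset of the messages sent this round is delivered.
    inbox = {p: [] for p in states}
    for (src, dst), msg in sent.items():
        if dst in inbox and not lost(rnd, src, dst):
            inbox[dst].append((src, msg))
    # Computation subround: state transition based on the messages just received.
    return {p: transition(p, st, inbox[p]) for p, st in states.items()}


if __name__ == "__main__":
    # Each processor gossips a set of values; the message from 0 to 2 is lost.
    states = {0: {0}, 1: {1}, 2: {2}}
    send = lambda p, st, r: {q: frozenset(st) for q in states}
    transition = lambda p, st, inbox: st.union(*[m for _, m in inbox])
    print(run_round(1, states, send, transition, lambda r, s, d: (s, d) == (0, 2)))
```

Restricting the `lost` predicate is then one way to express assumptions about when the message system behaves reliably.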
However, we assume that there is some round GST, such that all messages sent from correct processors to correct processors at round GST or afterward are delivered during the round at which they were sent. As explained in the Introduction, loss of a message before GST does not necessarily make the sender or the receiver faulty. Although all processors have a common numbering for the rounds, they do not know when round GST occurs. The various kinds of faults are defined for the basic model as for the earlier models.

3.2 PROTOCOLS IN THE BASIC ROUND MODEL. In the remainder of this section, we show how the consensus problem can be solved for the basic model, for each of the fault types. To argue that our protocols achieve strong unanimity, we use the notion of a proper value defined as follows: If all processors start with the same value v, then v is the only proper value; if there are at least two different initial values, then all values in V are proper. In all protocols, each processor will maintain a local variable PROPER, which contains a set of values that the processor knows to be proper. Processors will always piggyback their current PROPER sets on all messages. The way of updating the PROPER sets will vary from algorithm to

algorithm. If only weak unanimity is desired, the PROPER sets are not needed, and the protocols can be simplified somewhat; we leave these simplifications to the interested reader.

3.2.1 Fail-Stop and Omission Faults. The first algorithm is used for either fail-stop or omission faults. It achieves strong unanimity for an arbitrary value domain V.

Algorithm 1. N ≥ 2t + 1

Initially, each processor’s set PROPER contains just its own initial value. Each processor attaches its current value of PROPER to every message that it sends. Whenever a processor p receives a PROPER set from another processor that contains a particular value v, then p puts v into its own PROPER set. It is easy to check that each PROPER set always contains only proper values.

The rounds are organized into alternating trying and lock-release phases, where each trying phase consists of three rounds and each lock-release phase consists of one round. Each pair of corresponding phases is assigned an integer, starting with 1. We say that phase h belongs to processor p_i if h ≡ i (mod N).

At various times during the algorithm, a processor may lock a value v. A phase number is associated with every lock. If p locks v with associated phase number k ≡ i (mod N), it means that p thinks that processor p_i might decide v at phase k. Processor p only releases a lock if it learns its supposition was false. A value v is acceptable to p if p does not have a lock on any value except possibly v. Initially, no value is locked.

We now describe the processing during a particular trying phase k. Let s = 4k - 3 be the number of the first round in phase k, and assume k ≡ i (mod N). At round s each processor (including p_i) sends a list of all its acceptable values that are also in its proper set to processor p_i (in the form of a (list, k) message). (If V is very large, it is more efficient to send a list of proper values and a list of unacceptable values.
Given these lists, the proper acceptable values are easily deduced.) Just after round s, that is, during the computation subround between rounds s and s + 1, processor p_i attempts to choose a value to propose. In order for processor p_i to propose v, it must have heard that at least N - t processors (possibly including itself) find value v acceptable and proper at the beginning of phase k. There might be more than one possible value that processor p_i might propose; in this case processor p_i will choose one arbitrarily. Processor p_i then broadcasts a message (lock v, k) at round s + 1.

If any processor receives a (lock v, k) message at round s + 1, it locks v, associating the phase number k with the lock, and sends an acknowledgment to processor p_i (in the form of an (ack, k) message), at round s + 2. In this case any earlier lock on v is released. (Any locks on other values are not released at this time.) If processor p_i receives acknowledgments from at least t + 1 processors at round s + 2, then processor p_i decides v. After deciding v, processor p_i continues to participate in the algorithm.

Lock-release phase k occurs at round s + 3 = 4k. At round s + 3, each processor p broadcasts the message (v, h) for all v and h such that p has a lock on v with associated phase h. If any processor has a lock on some value v with associated phase h, and receives a message (w, h′) with w ≠ v and h′ ≥ h, then the processor releases its lock on v.

LEMMA 3.1. It is impossible for two distinct values to acquire locks with the same associated phase.

PROOF. In order for two values v and w to acquire a lock at trying phase k, the processor to which phase k belongs must send conflicting (lock v, k) and (lock w, k) messages, which it will never do in this fault model. □

LEMMA 3.2. Suppose that some processor decides v at phase k, and k is the smallest numbered phase at which a decision is made. Then at least t + 1 processors lock v at phase k. Moreover, each of the processors that locks v at phase k will, from that time onward, always have a lock on v with associated phase number at least k.

PROOF. It is clear that at least t + 1 processors lock v at phase k. Assume that the second conclusion is false. Then let l be the first phase at which one of the locks on v set at phase k is released without immediately being replaced by another, higher numbered lock on v. In this case the lock is released during lock-release phase l, when it is learned that some processor has a lock on some w ≠ v with associated phase h, where k ≤ h ≤ l. Lemma 3.1 implies that no processor has a lock on any w ≠ v with associated phase k. Therefore, some processor has a lock on w with associated phase h, where k < h ≤ l. Thus, it must be that w is found acceptable to at least N - t processors at the first round of some phase numbered h, k < h ≤ l, which means that at least N - t processors do not have v locked at the beginning of that phase. Since t + 1 processors have v locked at least through the first round of l, this is impossible, because (N - t) + (t + 1) > N. □

LEMMA 3.3. Immediately after any lock-release phase that occurs at or after GST, the set of values locked by correct processors contains at most one value.

PROOF. Straightforward from the lock-release rule. □

THEOREM 3.1. Assume the basic model with fail-stop or omission faults. Assume N ≥ 2t + 1. Then Algorithm 1 achieves consistency, strong unanimity, and termination for an arbitrary value domain.

PROOF. First, we show consistency.
Suppose that some correct processor p_i decides v at phase k, and this is the smallest numbered phase at which a decision is made. Then Lemma 3.2 implies that, at all times after phase k, at least t + 1 processors have v locked. Consequently, at no later phase can any value other than v ever be acceptable to N - t processors, so no processor will ever decide any value other than v.

Next, we argue strong unanimity. If all the initial values are v, then v is the only value that is ever in the PROPER set of any processor. Thus, v is the only possible decision value.

Finally, we argue termination. Consider any trying phase k belonging to a correct processor p_i that is executed after a lock-release phase, both occurring at or after round GST. We claim that processor p_i will reach a decision at trying phase k (if it has not done so already). By Lemma 3.3, there is at most one value locked by correct processors at the start of trying phase k. If there is such a locked value, v, then sufficient communication has occurred by the beginning of trying phase k so that v is in the PROPER set of each correct processor. Moreover, any initial value of a correct processor is in the PROPER set of each correct processor at the beginning of trying phase k. Since there are at least N - t ≥ t + 1 correct processors, it follows that a proper, acceptable value will be found for processor p_i to propose, and that the proposed value will be decided on by processor p_i at trying phase k. □
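As an illustration of the bookkeeping in Algorithm 1, the following Python sketch encodes the round arithmetic (s = 4k - 3), phase ownership, the N - t threshold used by the proposer, and the lock and lock-release rules. It is a minimal sketch and not part of the original presentation: all function names are hypothetical, processors are assumed to be indexed 1..N, and a processor's locks are assumed to be kept in a dictionary mapping each locked value to its associated phase. Message transport and fault behavior are not modeled.

```python
from collections import Counter


def first_round(k):
    """Round s = 4k - 3 at which trying phase k starts (phases count from 1)."""
    return 4 * k - 3


def owner(k, n):
    """Index i in 1..n with k ≡ i (mod n): the processor that phase k belongs to."""
    return (k - 1) % n + 1


def proposable(lists, n, t):
    """Values that at least n - t senders reported as acceptable and proper.

    `lists` maps a sender id to the set of values in its (list, k) message.
    """
    counts = Counter(v for values in lists.values() for v in values)
    return {v for v, c in counts.items() if c >= n - t}


def acceptable(locks, v):
    """v is acceptable if no value other than v is locked."""
    return all(w == v for w in locks)


def on_lock(locks, v, k):
    """Handle (lock v, k): lock v with phase k, replacing any earlier lock on v."""
    locks[v] = k


def on_release(locks, w, h_prime):
    """Handle (w, h'): release each lock on v != w whose phase h satisfies h' >= h."""
    for v in [v for v, h in locks.items() if v != w and h_prime >= h]:
        del locks[v]
```

For example, with N = 4 and t = 1 a value is proposable only if it appears in at least three of the received lists, and a lock on v with phase 2 survives a message (w, 1) but is released by a message (w, 3).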

It is easy to see that all correct processors make decisions by round GST + 4(N + 1).

3.2.2 Byzantine Faults with Authentication. The second algorithm achieves strong unanimity for an arbitrary value set V, in the case of Byzantine faults with authentication.

Algorithm 2. N ≥ 3t + 1

Initially, each processor's PROPER set contains just its own initial value. Each processor attaches its PROPER set and its initial value to every message it sends. If a processor p ever receives 2t + 1 initial values from different processors, among which there are not t + 1 with the same value, then p puts all of V (the total value domain) into its PROPER set. (Of course, p would actually just set a bit indicating that PROPER contains all of V.) When a processor p receives claims from at least t + 1 other processors that a particular value v is in their PROPER sets, then p puts v into its own PROPER set. It is not difficult to check that each PROPER set for a correct processor always contains only proper values.

Processing is again divided into alternating trying and lock-release phases, with phases numbered as before and of the same length as before. At various times during the algorithm, processors may lock values. In Algorithm 2, not only is a phase number associated with every lock, but also a proof of acceptability of the locked value, in the form of a set of signed messages, sent by N - t processors, saying that the locked value is acceptable and in their PROPER sets at the beginning of the given phase. A value v is acceptable to p if p does not have a lock on any value except possibly v.

We now describe the processing during a particular trying phase k. Let s = 4k - 3 be the first round of phase k, and assume k ≡ i (mod N). At round s, each processor p_j (including p_i) sends a list of all its acceptable values that are also in its PROPER set to processor p_i, in the form E_j(list, k), where E_j is an authentication function.
Just after round s, processor p_i attempts to choose a value to propose. In order for processor p_i to propose v, it must have heard that at least N - t processors find value v acceptable and proper at phase k. Again, if there is more than one possible value that processor p_i might propose, then it will choose one arbitrarily. Processor p_i then broadcasts a message E_i(lock v, k, proof), where the proof consists of the set of signed messages E_j(list, k) received from the N - t processors that found v acceptable and proper.

If any processor receives an E_i(lock v, k, proof) message at round s + 1, it decodes the proof to check that N - t processors find v acceptable and proper at phase k. If the proof is valid, it locks v, associating the phase number k and the message E_i(lock v, k, proof) with the lock, and sends an acknowledgment to processor p_i. In this case any earlier lock on v is released. (Any locks on other values are not released at this time.) If the processor should receive such messages for more than one value v, it handles each one similarly. The entire message E_i(lock v, k, proof) is said to be a valid lock on v at phase k. If processor p_i receives acknowledgments from at least 2t + 1 processors, then processor p_i decides v. After deciding v, processor p_i continues to participate in the algorithm.

Lock-release phase k occurs at round s + 3 = 4k. Processors broadcast messages of the form E_i(lock v, h, proof), indicating that the sender has a lock on v with associated phase h and the given associated proof, and that processor p_i sent the message at phase h, which caused the lock to be placed. If any processor has a lock on some value v with associated phase h and receives a properly signed message E_j(lock w, h′, proof′) with w ≠ v and h′ ≥ h, then the processor releases its lock on v.

LEMMA 3.4. It is impossible for two distinct values to acquire valid locks at the same trying phase if that phase belongs to a correct processor.

PROOF. In order for different values v and w to acquire valid locks at trying phase k, the processor p_i to which phase k belongs must send conflicting E_i(lock v, k, proof) and E_i(lock w, k, proof′) messages, which correct processors can never do. □

LEMMA 3.5. Suppose that some correct processor decides v at phase k, and k is the smallest numbered phase at which a decision is made by a correct processor. Then at least t + 1 correct processors lock v at phase k. Moreover, each of the correct processors that locks v at phase k will, from that time onward, always have a lock on v with associated phase number at least k.

PROOF. Since at least 2t + 1 processors send an acknowledgment that they locked v at phase k, it is clear that at least t + 1 correct processors lock v at phase k. Assuming that the second conclusion is false, the remaining proof by contradiction is identical to the proof of Lemma 3.2. □

LEMMA 3.6. Immediately after any lock-release phase that occurs at or after GST, the set of values locked by correct processors contains at most one value.

PROOF. Straightforward from the lock-release rule. □

THEOREM 3.2. Assume the basic model with Byzantine faults and authentication. Assume N ≥ 3t + 1. Then Algorithm 2 achieves consistency, strong unanimity, and termination for an arbitrary value domain.

PROOF. The proofs of consistency and strong unanimity are as in the proof of Theorem 3.1. To argue termination, consider any trying phase k belonging to a correct processor p_i that is executed after a lock-release phase, both occurring at or after GST. We claim that processor p_i will reach a decision at trying phase k (if it has not done so already).
By Lemma 3.6, there is at most one value locked by correct processors at the start of trying phase k. If there is such a locked value v, then v was found to be proper to at least N - t processors, of which N - 2t ≥ t + 1 must be correct. Therefore, by the beginning of trying phase k, these t + 1 correct processors have communicated to all correct processors that v is proper, so by the way the set PROPER is augmented every correct processor will have v in its PROPER set by the beginning of trying phase k. Next, consider the case in which no value is locked at the beginning of trying phase k (so all values are acceptable). If there are at least t + 1 correct processors with the same initial value v, then v is in the PROPER set of each correct processor at the beginning of trying phase k. On the other hand, if this is not the case, then all values in the value set are in the PROPER set of all correct processors at the beginning of trying phase k. It follows that a proper, acceptable value will be found for processor p_i to propose, and that the proposed value will be decided on by processor p_i at trying phase k. □

As in the previous case, GST + 4(N + 1) is an upper bound on the number of rounds required for all the correct processors to reach decisions.

3.2.3 Byzantine Faults without Authentication. In this section we modify Algorithm 2 to handle Byzantine faults without authentication, while maintaining the same requirement, N ≥ 3t + 1, on the number of processors and maintaining polynomial time complexity and polynomial message lengths. The modification is done by using a minor variation of a broadcast primitive, introduced by Srikanth and Toueg [20], which simulates the crucial properties of authentication. We first state these properties, then give the broadcast primitive, and finally describe the new agreement protocol.

The broadcast primitive (and hence the agreement algorithm that uses the broadcast primitive) is defined in terms of superrounds, where each superround consists of two normal Send-Receive rounds. Superround GST is the earliest superround for which both of its Send-Receive rounds occur at or after round GST. The primitive gives an algorithm for a processor p to BROADCAST a message m at superround k and also gives conditions under which a processor will accept a message m from p (which is not to be confused with our definition of an “acceptable value”).

The crucial properties of broadcasting that are used in the (authenticated) Algorithm 2 are as follows:

(1) Correctness. If a correct processor p BROADCASTS m in superround k ≥ GST, then every correct processor accepts m from p in superround k.
(2) Unforgeability. If a correct processor p does not BROADCAST m, then no correct processor ever accepts m from p.
(3) Relay. If a correct processor accepts m from p in superround r, then every other correct processor accepts m from p in superround max(r + 1, GST) or earlier.

The description of the BROADCAST primitive is given in Figure 1. The proof that the primitive has the Correctness and Unforgeability properties is identical to the proof of Srikanth and Toueg [20]. We give the proof of the Relay property since it is slightly different from that in [20]. Say the correct processor q accepts m from p in superround r. Therefore, q must have received (echo, p, m, k) from at least N - t processors by the end of the second round of superround r, so at least N - 2t correct processors sent (echo, p, m, k).
By definition of BROADCAST, if a correct processor sends (echo, p, m, k) at some round h, it continues to send (echo, p, m, k) at all rounds after h. Therefore, every correct processor will receive (echo, p, m, k) from at least N - 2t processors by the end of the first round of superround max(r + 1, GST). Hence, every correct processor will send (echo, p, m, k) at the second round of max(r + 1, GST). So every correct processor will receive N - t (echo, p, m, k) messages by superround max(r + 1, GST) or earlier, and will accept m from p.

The only difference between the protocol of Figure 1 and that of Srikanth and Toueg [20] is in the relaying of an (echo, p, m, k) message after N - 2t (echo, p, m, k) messages have been received. In our case, the echo message continues to be sent at every round after the N - 2t echoes are received, whereas in [20] the echo is sent only once. Since messages can be lost before GST in our model, the resending seems to be needed to get the Relay property. Although resending the echoes makes message length grow proportionally to the round number (since a new invocation of BROADCAST could be started at each round), message length is still polynomial in N and GST. In models where messages cannot be lost, such as the unknown delta model, each (echo, p, m, k) need be sent only once by each correct processor, resulting in shorter messages.

Next follows the new algorithm for the unauthenticated Byzantine case in the basic model. It is patterned after the authenticated algorithm. In particular, handling of PROPER sets is done exactly as in Algorithm 2.
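Before turning to Algorithm 3, the two echo thresholds of the BROADCAST primitive (Figure 1) can be illustrated with a short Python sketch of the state one processor keeps for a single (p, m, k) triple. This is a simplified sketch under stated assumptions, not the primitive itself: the class and method names are hypothetical, the distinction between "this round" and "previous rounds" is abstracted away by accumulating echo senders in a set, and the caller is assumed to invoke `on_init` only when exactly one init message from p was received in the first round.

```python
class EchoTracker:
    """Echo bookkeeping at one processor for a single (p, m, k) triple."""

    def __init__(self, n, t):
        if n < 3 * t + 1:
            raise ValueError("the primitive assumes n >= 3t + 1")
        self.n = n
        self.t = t
        self.got_init = False      # unique (init, p, m, k) received from p
        self.echo_senders = set()  # distinct processors whose echo arrived

    def on_init(self):
        """Record the (init, p, m, k) message from p."""
        self.got_init = True

    def on_echo(self, sender):
        """Record an (echo, p, m, k) message; duplicates count once."""
        self.echo_senders.add(sender)

    def sends_echo(self):
        """Echo after the init, or after n - 2t distinct echoes were received.

        The set of senders only grows, so once this holds it keeps holding,
        which matches the rule that echoes are resent at all later rounds.
        """
        return self.got_init or len(self.echo_senders) >= self.n - 2 * self.t

    def accepts(self):
        """Accept m from p once n - t distinct echoes have been received."""
        return len(self.echo_senders) >= self.n - self.t
```

With N = 4 and t = 1, for instance, a processor that never received the init message starts echoing after two distinct echoes and accepts after three.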

BROADCAST of m by p at superround k: N ≥ 3t + 1

Superround k:
  First round:
    p sends (init, p, m, k) to all;
  Second round:
    Each processor executes the following for any message m:
      if received (init, p, m, k) from p in the first round and received only one init message from p in the first round, then send (echo, p, m, k) to all;
      if received (echo, p, m, k) from at least N - t distinct processors in this round, then accept m from p;
All subsequent rounds:
  Each processor executes the following for any message m:
    if received (echo, p, m, k) from at least N - 2t distinct processors in previous rounds, then send (echo, p, m, k) to all;
    if received (echo, p, m, k) from at least N - t distinct processors in this or previous rounds, then accept m from p.

FIG. 1. The BROADCAST primitive.

Algorithm 3. N ≥ 3t + 1

Processing is again divided into trying and lock-release phases, with phases numbered as before. Each trying phase takes three superrounds, that is, six ordinary rounds. Lock-release phase k is done during the third superround of trying phase k. As before, a value v is acceptable to p if p does not have a lock on any value except possibly v.

We now describe the processing during a particular trying phase k. Let s = 3k - 2 be the first superround of phase k, and assume k ≡ i (mod N). At superround s, each processor p_j (including p_i) BROADCASTS a list of all its acceptable values that are also in its PROPER set in the form (list, k). Just after superround s, processor p_i attempts to choose a value to propose. In order for processor p_i to propose v, it must have accepted messages from at least N - t processors stating that they find value v acceptable and proper at phase k. Again, if there is more than one possible value that processor p_i might propose, then it will choose one arbitrarily. Processor p_i then BROADCASTS a message (lock v, k) during superround s + 1.
If any processor q has by superround s + 1 accepted a message (lock v, k) from p_i and also accepted messages (list, k) from N - t processors stating that they find v acceptable and proper at the first superround of phase k, then q locks v, associating the phase number k with the lock, and sends an acknowledgment (ack, k) to processor p_i. In this case any earlier lock on v is released. (Any locks on other values are not released at this time.) If the processor should receive such messages for more than one value v, it handles each one similarly. We say that q accepts a valid lock on v with phase k if it has accepted a message (lock v, k) from p_i and accepted N - t messages (list, k) as just described. These messages do not all have to be accepted at the same round. If processor p_i receives acknowledgments from at least 2t + 1 processors, then processor p_i decides v. After deciding v, processor p_i continues to participate in the algorithm.

Lock-release phase k occurs at the end of the third superround of phase k. In this algorithm the lock-release phase does not send any messages. If a processor q has a lock on some value v with associated phase h and q has accepted, at this round or earlier, a valid lock on w with associated phase h′, and if w ≠ v and h′ ≥ h, then q releases its lock on v.

LEMMA 3.7. It is impossible for correct processors to accept valid locks on two distinct values with associated phase k if phase k belongs to a correct processor.

PROOF. Suppose that the lemma is false. By the Unforgeability property, the processor p_i to which phase k belongs must BROADCAST conflicting (lock v, k) and (lock w, k) messages, which correct processors can never do. □

LEMMA 3.8. Suppose that some correct processor decides v at phase k, and k is the smallest numbered phase at which a decision is made by a correct processor. Then at least t + 1 correct processors lock v at phase k. Moreover, each of the correct processors that locks v at phase k will, from that time onward, always have a lock on v with associated phase number at least k.

PROOF. Since at least 2t + 1 processors send an acknowledgment that they locked v at phase k, it is clear that at least t + 1 correct processors lock v at phase k. The rest of the proof is similar to the proofs of Lemmas 3.2 and 3.5, using the Unforgeability property to argue that, if a correct processor q accepts a valid lock on value w ≠ v with associated phase h, then w is found acceptable to all but at most t of the correct processors at the first round of phase h. □

LEMMA 3.9. Immediately after any lock-release phase that occurs at or after GST, the set of values locked by correct processors contains at most one value.

PROOF. Straightforward from the lock-release rule and the Relay property. □

THEOREM 3.3. Assume the basic model with Byzantine faults without authentication. Assume N ≥ 3t + 1. Then Algorithm 3 achieves consistency, strong unanimity, and termination for an arbitrary value domain.

PROOF. The proof is virtually identical to the proof of Theorem 3.2, using the Correctness and Relay properties after GST to argue termination.
□

An upper bound on the number of rounds required is GST + 6(N + 1).

Remark 1. Algorithms 1-3 have the property that all correct processors make a decision within O(N) rounds after GST. The time to reach agreement after GST can be improved to O(t) rounds by some simple modifications. The bound O(t) is optimal to within a constant factor, since t + 1 rounds are necessary even if communication and processors are both synchronous and failures are fail-stop [7, 9]. A modification to all the algorithms is to have a processor repeatedly broadcast the message “Decide v” after it decides v. For Algorithm 1 (fail-stop and omission faults), a processor can decide v when it receives any “Decide v” message. For Algorithms 2 and 3 (Byzantine faults), a processor can decide v when it receives t + 1 “Decide v” messages from different sources. Easy arguments show that the modified algorithms are still correct and that all correct processors make a decision within O(t) rounds after GST; these arguments are left to the reader.

4. Partially Synchronous Communication and Synchronous Processors

In this section we assume that processors are completely synchronous (Φ = 1) and communication is partially synchronous. We show how to use these models to simulate the basic model of Section 3.1 and thus to solve the same consensus problem.

Since processors operate in lock-step synchrony, it is useful to imagine that each (correct) processor has a clock that is perfectly synchronized with the clocks of other correct processors. Initially, the clock is 0, and a processor increments its clock by 1 every time it takes a step. The assumption Φ = 1 implies that the clocks of all correct processors are exactly the same at any real-time step.

As presented in Section 2, there are two different definitions of partially synchronous communication: (1) delta is unknown, and (2) delta holds eventually. We consider these two cases separately. Section 4.1 describes the upper bound results for the model in which delta holds eventually. Section 4.2 describes the upper bound results for delta unknown. Finally, Section 4.3 contains the lower bound results.

4.1 UPPER BOUNDS WHEN DELTA HOLDS EVENTUALLY. We first consider the model in which delta holds eventually. Fix any of the four possible fault models. We show that, if there is a t-resilient consensus protocol in the basic model, then there is one in the model in which delta holds eventually.

To see the implication, fix Δ and assume algorithm A works for the basic model. From A we define an algorithm A′ for the model in which Δ holds eventually. Let R = N + Δ. Each processor divides its steps into groups of R, and uses each group to simulate its own actions in a single round of algorithm A. More specifically, the processor uses the first N steps of group r to send its round r messages to the N processors, sending to one processor at a time, and uses the last Δ steps to perform Receive operations. The state transition for round r is simulated at the last step of group r. (The number R is large enough to allow all processors to exchange messages within a single group of steps, once GST has been reached.)
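The grouping of steps just described can be sketched in Python as follows. This is only an illustrative sketch: the function name is hypothetical, local steps are assumed to be counted from 0, rounds and processors are assumed to be numbered from 1, and Δ is assumed to be a positive integer.

```python
def locate_step(step, n, delta):
    """Map a 0-based local step index to (round, kind, target).

    Each group of R = n + delta steps simulates one round: the first n
    steps send to processors 1..n in turn, the last delta steps are
    Receive operations, and the very last step of the group also
    performs the simulated state transition.
    """
    group, offset = divmod(step, n + delta)
    round_no = group + 1
    if offset < n:
        return round_no, "send", offset + 1
    if offset == n + delta - 1:
        return round_no, "receive+transition", None
    return round_no, "receive", None
```

For N = 3 and Δ = 2, steps 0 through 2 send the round-1 messages, steps 3 and 4 are Receive steps (with the round-1 state transition at step 4), and step 5 begins round 2.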
Each processor always attaches a round identifier (number) to messages, and any message sent during a round r that arrives late during some round r′ > r is ignored. Thus, communication during each round is independent of communication during any other round.

For any run e′ of A′, it is easy to show that there exists a corresponding run e of A with the following properties:

(1) All processors that are correct in e′ are also correct in e.
(2) The types of faults exhibited by the faulty processors are the same in e′ as in e.
(3) Every state transition of a correct processor in e is simulated by the corresponding correct processor in e′.

Since algorithm A is assumed to be a t-resilient consensus protocol for the basic round model, consensus is eventually reached in e, and so in e′, as needed. By applying the transformation just described to Algorithms 1-3, we obtain Algorithms 1′-3′, respectively. We immediately obtain the following result:

THEOREM 4.1. Assume that processors are completely synchronous (Φ = 1) and communication is partially synchronous (Δ holds eventually).

(a) For the fail-stop or omission fault model, if N ≥ 2t + 1, then Algorithm 1′ achieves consistency, strong unanimity, and termination for an arbitrary value domain.
(b) For the authenticated Byzantine fault model, if N ≥ 3t + 1, then Algorithm 2′ achieves consistency, strong unanimity, and termination for an arbitrary value domain.
(c) For the unauthenticated Byzantine fault model, if N ≥ 3t + 1, then Algorithm 3′ achieves consistency, strong unanimity, and termination for an arbitrary value domain.

It is easy to see that Algorithms 1′ and 2′ guarantee that decisions are reached by all correct processors within time 4(N + 1)(N + Δ) after GST. The corresponding bound for Algorithm 3′ is 6(N + 1)(N + Δ). Thus, the time for Algorithms 1′-3′ is bounded above by GST plus a polynomial in N and Δ. Remark 1 at the end of Section 3 shows how these time bounds can be improved. As mentioned in the Introduction, these bounds also give the time after GST when Δ can stop holding again.

4.2 UPPER BOUNDS FOR DELTA UNKNOWN. Now we consider the model in which delta is unknown. Fix any of the four possible fault models. We show that, if there is a t-resilient protocol in the basic model, then there is one in the model in which delta is unknown.

Let algorithm A work for the basic model. As before, we define A′ from A so that every execution of A′ is a simulation of an execution of A. Let R_r = N + r. Each processor in A′ divides its steps into groups so that its rth group contains exactly R_r steps. As before, the processor uses each group to simulate its own actions in a single round of algorithm A. Thus, the processor uses the first N steps of group r to send its round r messages to the N processors, one processor at a time, and uses the last r steps to perform Receive operations. The round r state transition is simulated at the last step of group r. Again, each processor always attaches a round identifier (number) to messages, and any message sent during a round r that arrives late during some round r′ > r is ignored.

Now consider any run e′ of A′, and assume that the communication bound Δ holds in e′. As before, it is easy to define a corresponding run e of A.
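The growing groups used in this construction can be illustrated with a small Python sketch. The function names are hypothetical, and the sketch assumes that local steps are counted from 0 and that groups (simulated rounds) are numbered from 1; it only reproduces the arithmetic of the group lengths R_r = N + r.

```python
def group_length(r, n):
    """Number of steps R_r = n + r in the group that simulates round r."""
    return n + r


def group_start(r, n):
    """Number of steps taken before group r begins.

    This is the sum of n + j for j = 1..r-1, i.e. (r-1)*n + (r-1)*r/2.
    """
    return (r - 1) * n + (r - 1) * r // 2


def round_of_step(step, n):
    """Round whose group contains the given 0-based local step."""
    r = 1
    while group_start(r + 1, n) <= step:
        r += 1
    return r


def receive_steps(r, n):
    """The last r steps of group r, which are used for Receive operations."""
    end = group_start(r, n) + group_length(r, n)
    return range(end - r, end)
```

For N = 3, group 1 occupies steps 0 to 3, group 2 occupies steps 4 to 8, and group 3 occupies steps 9 to 14, so the Receive window grows by one step with every simulated round.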
The number of steps in e′ that are allotted for the simulation of any round r ≥ Δ is sufficient to allow all messages that are sent during round r to get received. Thus, e is an allowable run of A (with Δ as its GST round). Since A is assumed to be a t-resilient consensus protocol for the basic model, consensus is eventually reached in e, and so in e′, as needed. By applying this transformation to Algorithms 1-3, we obtain Algorithms 1²-3², respectively, and immediately obtain the following result:

THEOREM 4.2. In the model in which processors are completely synchronous (Φ = 1) and communication is partially synchronous (delta is unknown), claims (a)-(c) of Theorem 4.1 hold for Algorithms 1²-3², respectively.

We now bound the time required by Algorithms 1²-3². Consider Algorithm 1², for example, and fix any execution e with corresponding message bound Δ. Then round Δ is the GST for the execution of Algorithm 1 simulated by e. It requires at most time Δ(N + Δ) for processors to complete their simulations of the first Δ rounds of Algorithm 1 (Δ rounds, with N + Δ as the maximum time to simulate a single round). Then an additional 4(N + 1) rounds, at most, must be simulated. These additional rounds require at most time 4(N + 1)(N + Δ + 4(N + 1)), where the term (N + Δ + 4(N + 1)) represents the maximum time to simulate one of these rounds (the last and largest one). Thus the total time is bounded by Δ(N + Δ) + 4(N + 1)(N + Δ + 4(N + 1)), or O(N² + Δ²). The same bound holds for Algorithm 2². The corresponding bound for Algorithm 3² is Δ(N + Δ) + 6(N + 1)(N + Δ + 6(N + 1)). Thus the time for Algorithms 1²-3² is bounded above by a polynomial in N and Δ. Again, these bounds can be improved using the ideas in Remark 1 at the end of Section 3.

Remark 2. If we strengthen the model where delta holds eventually to require that no messages are ever lost, but that messages sent before GST can arrive late, then we can modify Algorithms 1′-3′ to allow processors to terminate. Specifically, we use the ideas described in Remark 1 at the end of Section 3. In the present case, however, each processor need only broadcast a single “Decide v” message, at the time when it decides v. This message is not tagged with a round number, and other processors should accept a “Decide v” message at any time. For fail-stop or omission faults, a processor can stop participating in the algorithm immediately after it broadcasts its “Decide v” message. Further, it can decide v immediately after receiving a “Decide v” message. For Byzantine faults, a processor can decide v after receiving t + 1 “Decide v” messages, but it cannot stop participating in the algorithm until after it has broadcast its “Decide v” message and received “Decide v” messages from a total of 2t + 1 processors.

If messages can be lost before GST, it is not hard to argue that, in any consensus protocol resilient to one fail-stop fault, there is some execution in which at least one correct processor must continue sending messages forever. The argument is similar to those for Theorems 4.3 and 4.4 in the next subsection. All that is needed to ensure halting in practice, however, is that each correct processor be able to reliably deliver a “Decide v” message to every other correct processor; in the absence of network partition, this could be done by repeated sending.

Remark 3. All the results of this section have assumed Φ = 1. If processors are synchronous with Φ > 1 and communication is partially synchronous, we would hope to obtain the same results.
We show that this extension holds by proving a more general set of results: In Section 5 we show that the resiliency achieved by the protocols of this section can also be achieved if both processors and communication are partially synchronous. These stronger results imply that the same resiliency is achievable if communication is partially synchronous and processors are synchronous with Φ > 1.

4.3 LOWER BOUNDS. In this section we give our lower bound results for partially synchronous communication and completely synchronous processors. The first lower bound shows that the resiliency of Theorems 4.1 and 4.2, part (a), cannot be improved, even for weak unanimity and a binary value domain.

THEOREM 4.3. Assume the model with fail-stop or omission faults, where the processors are synchronous and communication is partially synchronous (either delta holds eventually or delta is unknown). Assume 2 ≤ N ≤ 2t. Then there is no t-resilient consensus protocol that achieves weak unanimity for binary values.

PROOF. The proof is the same for both definitions of partially synchronous communication. Assume the contrary, that there is an algorithm immune to fail-stop faults satisfying the required properties. We shall derive a contradiction.

Divide the processors into two groups, P and Q, each with at least 1 and at most t processors. First consider the following Scenario A: All initial values are 0, the processors in Q are initially dead, and all messages sent from processors in P to processors in P are delivered in exactly time 1. By t-resiliency, the processors in P must reach a decision; say that this occurs within time T_A. The decision must be 0. For if it were 1, we could modify the scenario to one in which the processors in Q are alive but all messages sent from Q to P take more than time T_A to be delivered. In the modified scenario, the processors in P still decide 1, contradicting weak unanimity.

Consider Scenario B: All initial values are 1, the processors in P are initially dead, and messages sent from Q to Q are delivered in exactly time 1. By a similar argument, the processors in Q decide 1 within T_B steps for some finite T_B.

Consider Scenario C (for Contradiction): Processors in P have initial values 0, processors in Q have initial values 1, all processors are alive, messages sent from P to P or from Q to Q are delivered in exactly time 1, and messages sent from P to Q or from Q to P take more than max(T_A, T_B) steps to be delivered. The processors in group P (respectively, group Q) act exactly as they do in Scenario A (respectively, Scenario B). Hence the processors in P decide 0 and those in Q decide 1, which contradicts consistency. □

The following lower bound shows that the resiliency of Theorems 4.1 and 4.2, part (b), cannot be improved, even for the case of weak unanimity and a binary value domain.

THEOREM 4.4. Assume the model with Byzantine faults and authentication, in which the processors are synchronous and communication is partially synchronous (either delta holds eventually or delta is unknown). Assume 2 ≤ N ≤ 3t. Then there is no t-resilient consensus protocol that achieves weak unanimity for binary values.

PROOF. Again, the proof is the same for both definitions of partially synchronous communication. Assume the contrary. We shall derive a contradiction.

If N = 2, then the theorem follows from the previous lower bound, Theorem 4.3. Assume then that N ≥ 3. Divide the processors into three groups, P, Q, and R, each with at least 1 and at most t processors. First consider the following Scenario A: All initial values are 0, the processors in R are initially dead, and all messages sent from processors in P ∪ Q to processors in P ∪ Q are delivered in exactly time 1.
By t-resiliency, the processors in P ∪ Q must reach a decision; say that this occurs within time T_A. As in the previous lower bound proof, the decision must be 0.

Consider Scenario B: All initial values are 1, the processors in P are initially dead, and messages sent from Q ∪ R to Q ∪ R are delivered in exactly time 1. By a similar argument, the processors in Q ∪ R decide 1 within T_B steps for some finite T_B.

Consider Scenario C: Processors in P have initial values 0, processors in R have initial values 1, and processors in Q are faulty. The processors in Q behave with respect to those in P exactly as they do in Scenario A, and with respect to those in R exactly as they do in Scenario B. The messages sent from P to P ∪ Q and from R to R ∪ Q are delivered in exactly time 1, but all messages from P to R or from R to P take more than max(T_A, T_B) steps to be delivered. The processors in group P (respectively, group R) act exactly as they do in Scenario A (respectively, Scenario B). This yields a contradiction, since the processors in P decide 0 while those in R decide 1. □

Since unauthenticated Byzantine faults are at least as hard to tolerate as authenticated ones, the preceding lower bound applies to them as well, and it is tight in that case too (Theorems 4.1 and 4.2, part (c)).

5. Partially Synchronous Communication and Processors

In this section we consider the case in which both communication and processors are partially synchronous. We show the existence of protocols with the same resiliencies as in the previous section, where only communication was partially synchronous. Moreover, the algorithms for corresponding cases still require amounts of time (specifically, polynomial) similar to the earlier case.

Again, we proceed by showing how to use the models of this section to simulate the basic model of Section 3. In the previous section, the processors had a common notion of time that allowed time to be divided into rounds. In this case, where Φ does not always hold or is unknown, no such common notion of time is available. Therefore, our first task is to describe protocols that give the processors some approximately common notion of time. We call such protocols distributed clocks.

Our distributed clocks do not use explicit knowledge of Δ or Φ. They are designed to be used in either kind of partially synchronous model, Δ and Φ holding eventually or Δ and Φ unknown. However, the properties that the clocks exhibit do depend on the particular bounds Δ and Φ that hold (eventually) during the particular run.

Each processor maintains a private (software) clock. The private clocks grow at a rate that is within some constant factor of real time and remain within a constant of each other. For the model with Δ and Φ unknown, these conditions hold at all times. For the GST model (that is, the model in which Δ and Φ hold eventually), however, these conditions are only guaranteed to hold after some constant amount of time after GST. The three "constants" here depend polynomially on N, Φ, and Δ. We have made no effort to optimize these constants, as this would obfuscate an already difficult and technical argument. In addition, the number of message bits sent by correct processors is polynomially bounded in N, Δ, Φ, and GST.

Once we have defined the distributed clocks, the protocols of Section 3 are simulated by letting each processor use its private clock to determine which round it is in. Several "ticks" of each private clock are used for the simulation of each round in the basic model.
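To make the idea of deriving a round number from a private clock concrete, here is a minimal Python sketch. It assumes a fixed number of ticks per round; the constant TICKS_PER_ROUND, the function name current_round, and the choice to number rounds from 1 are all hypothetical, since the text above says only that several ticks are used per round.

```python
# Hypothetical constant: the text only says "several" ticks per round.
TICKS_PER_ROUND = 8


def current_round(private_clock: int, ticks_per_round: int = TICKS_PER_ROUND) -> int:
    """Map a private clock value to a round of the simulated basic model.

    Assumption: rounds are numbered from 1 and each round spans exactly
    `ticks_per_round` consecutive clock values.
    """
    if private_clock < 0 or ticks_per_round <= 0:
        raise ValueError("clock must be >= 0 and ticks_per_round must be > 0")
    return private_clock // ticks_per_round + 1
```

Because private clocks are nondecreasing, a processor using such a mapping never moves back to an earlier round.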
In order to use a distributed clock in such simulations, we need to interleave the steps of the distributed-clock algorithm with steps belonging to the underlying algorithm being simulated. Moreover, the distributed-clock algorithm itself is conveniently described as interleaving Receive steps, which increase the recipient's knowledge of other processors' local clocks, with Send steps, which allow the sender to inform others about its local clock. To be specific, we assume that processors alternately execute a Receive operation for the clock, a Send operation for the clock, and a step of the algorithm being simulated.

In this section we describe what happens during the clock maintenance steps for two different distributed clocks. The first, presented in Section 5.1, handles Byzantine faults without authentication and requires N ≥ 3t + 1. The second, presented in Section 5.2, handles Byzantine faults with authentication and requires N ≥ 2t + 1. This clock obviously handles fail-stop and omission faults as well. In Section 5.3 the upper bounds for the model in which Δ and Φ hold eventually are given. In Section 5.4 we present the upper bound results for the model in which Δ and Φ are unknown. We do not prove lower bounds in this section, since the lower bounds obtained in Section 4 apply to the current models.

5.1 A DISTRIBUTED CLOCK FOR BYZANTINE FAULTS WITHOUT AUTHENTICATION. Throughout this section we assume that N ≥ 3t + 1. We again assume that real times are numbered 0, 1, 2, .... Processors participate in our distributed clock protocols by sending ticks to one another. As an expositional convenience, we define a master clock whose value at any time s depends on the past global behavior of the system and is a function of the ticks that have been sent before s. Even approximating the value of the master clock requires global information about what ticks have been sent to which processors. We therefore introduce a second type of message, called a claim, in which processors make assertions about the ticks they have sent.

An i-tick is the message i. An i⁺-tick is a j-tick for any j ≥ i. We say p has broadcast an i-tick if it has sent an i⁺-tick to all N processors. An i-claim is the message "I have broadcast an i-tick." An i⁺-claim is a j-claim for any j ≥ i. We say p has broadcast an i-claim if it has sent an i⁺-claim to all N processors. We adopt the convention that all processors have exchanged ticks and claims of size 0 before time 0. These messages are not actually sent, but they are considered to have been sent and received.

When we say that a certain event, such as the receipt of a certain message, has occurred "by time s," we mean that the event has occurred at some real-time step ≤ s.

The master clock, C: ℕ → ℕ, is defined at any real time s by

    C(s) = maximum j such that t + 1 correct processors have broadcast a j-tick by time s.

Since all processors are assumed by convention to have broadcast a 0-tick before time 0, C(0) = 0. Note that C(s) is a nondecreasing function of s.

For each processor p_i, the private clock, c_i: ℕ → ℕ, is defined by

    c_i(s) = maximum j such that, by time s, p_i has received either
        (1) messages from 2t + 1 processors, where each message is a j⁺-claim, or
        (2) messages from t + 1 processors, where each message is either a (j + 1)⁺-tick or a (j + 1)⁺-claim.

Since p_i is assumed to have received 0-claims from all N processors before time 0, c_i(0) = 0 for all correct p_i. Note that c_i(s) is nondecreasing for all correct p_i.

Let p_i be a correct processor. In sending ticks, p_i's goal is to increment the master clock, so ideally we would like p_i to send a (C(s) + 1)-tick at time s. However, knowing C(s) requires global information. Instead, p_i uses c_i, its view of C, to compute its next tick, sending a (c_i(s) + 1)-tick at time s.
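The two clock definitions above can be illustrated with a short Python sketch. This is only a sketch under stated assumptions: the message representation (kind, size, sender), the function names private_clock and master_clock, and the dictionary broadcast_tick are hypothetical and do not come from the paper. The size-0 messages exchanged by convention are modelled by never returning a value below 0.

```python
from typing import Dict, Iterable, Set, Tuple

# Hypothetical message record: (kind, size, sender), kind is "tick" or "claim".
Message = Tuple[str, int, int]


def private_clock(received: Iterable[Message], t: int) -> int:
    """Largest j satisfying condition (1) or (2) for the messages received so far."""
    msgs = list(received)
    best = 0  # size-0 ticks and claims are considered received by convention
    max_size = max((size for _, size, _ in msgs), default=0)
    for j in range(1, max_size + 1):
        # (1) j+-claims from 2t + 1 distinct processors
        claimers = {sender for kind, size, sender in msgs
                    if kind == "claim" and size >= j}
        # (2) (j+1)+-ticks or (j+1)+-claims from t + 1 distinct processors
        pushers = {sender for _, size, sender in msgs if size >= j + 1}
        if len(claimers) >= 2 * t + 1 or len(pushers) >= t + 1:
            best = j  # both conditions are monotone in j, so the last hit is the maximum
    return best


def master_clock(broadcast_tick: Dict[int, int], correct: Set[int], t: int) -> int:
    """Largest j such that t + 1 correct processors have broadcast a j-tick.

    broadcast_tick[p] is assumed to hold the largest i for which p has sent
    an i+-tick to all N processors (0 if p is absent).
    """
    sizes = sorted((broadcast_tick.get(p, 0) for p in correct), reverse=True)
    # The (t+1)-th largest value is the largest j reached by t + 1 correct processors.
    return sizes[t] if len(sizes) > t else 0
```

Note that master_clock needs global knowledge (which processors are correct and what each has broadcast), whereas private_clock uses only the messages one processor has received, which mirrors the reason the text gives for introducing private clocks.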
We show in Lemma 5.1 that c_i(s) ≤ C(s), so p_i will never force the master clock to skip a value. We also show that, "soon" after GST for the GST model, the value of the master clock exceeds those of the private clocks by at most a constant amount, so that p_i will not be pushing the master clock far ahead of the private clocks of the other processors.

Each processor p_i repeatedly cycles through all N processors, broadcasting, in different cycles, either ticks or claims. The private clock of p_i is stored in a local variable c_i. Processor p_i updates its private clock every time it executes a Receive operation in the clock protocol by considering all the ticks and claims it has received and updating its private clock according to the definition of the private clock given above (thus the private clock is updated every second clock step, i.e., every third step, that p_i takes). The following two programs describe how ticks and claims are sent during the sending steps of the clock protocol. A processor begins the distributed clock protocol by setting c_i to 0 and calling TICK(0), where TICK(b) is the protocol shown in Figure 2. Note that the value of c_i may change during an execution of TICK(b), but only a (b + 1)-claim (rather than a (c_i + 1)-claim) is sent during execution of CLAIM(b). This is consistent with our definition of what it means to have broadcast a (b + 1)-tick.
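The sending behavior of the TICK and CLAIM procedures of Figure 2 can be sketched as a Python generator that produces one message per Send step. This is an illustrative sketch, not the authors' implementation: the name clock_sender, the callback read_clock (which returns the current value of c_i as maintained by the Receive steps), and the tuple layout (kind, size, destination) are assumptions.

```python
from typing import Callable, Iterator, Tuple


def clock_sender(n: int, read_clock: Callable[[], int]) -> Iterator[Tuple[str, int, int]]:
    """Yield one (kind, size, destination) message per Send step, forever.

    Mirrors TICK(b) followed by CLAIM(b); destinations are numbered 1..n.
    """
    b = 0          # the protocol starts with c_i = 0 and a call to TICK(0)
    mode = "tick"
    while True:
        if mode == "tick":
            for j in range(1, n + 1):
                # (c_i + 1)-tick: c_i is re-read at every step and may exceed b
                yield ("tick", read_clock() + 1, j)
            mode = "claim"              # TICK(b) ends by calling CLAIM(b)
        else:
            for j in range(1, n + 1):
                # always a (b + 1)-claim, even if c_i has grown meanwhile
                yield ("claim", b + 1, j)
            c = read_clock()
            if c > b:
                b, mode = c, "tick"     # TICK(c_i)
            # otherwise stay in claim mode: CLAIM(b) again
```

A caller would advance the generator once per Send step of the clock protocol, interleaved with Receive steps that update the value returned by read_clock.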

    TICK(b):
        for j = 1, ..., N do
            send (c_i + 1)-tick to p_j;
        CLAIM(b).

    CLAIM(b):
        for j = 1, ..., N do
            send (b + 1)-claim to p_j;
        if c_i > b then TICK(c_i) else CLAIM(b).

    FIG. 2. The TICK and CLAIM procedures.

The following lemmas describe limitations on the rates of the master clock and the local clocks. The first three lemmas do not involve Δ and Φ, and so apply to either partially synchronous model (Δ and Φ holding eventually or Δ and Φ unknown).

LEMMA 5.1. For all s ≥ 0 and for all i such that p_i is correct, c_i(s) ≤ C(s).

PROOF. The proof is by induction on s. The basis s = 0 is obvious since c_i(0) = C(0) = 0 by definition. Fix some s and some correct p_i, and assume that the statement of the lemma is true for all s' < s and all correct p_k. Let j = c_i(s). By the definition of the private clock, there are two possibilities:

(1) p_i has received j⁺-claims from 2t + 1 different processors. Since at least t + 1 of these j⁺-claims are from correct processors, C(s) ≥ j by definition of the master clock.

(2) p_i has received messages from t + 1 different processors, each of which is either a (j + 1)⁺-tick or a (j + 1)⁺-claim. At least one of these processors is correct, and a correct processor sends a (j + 1)⁺-claim only after sending a (j + 1)⁺-tick, so some correct processor has sent a (j + 1)⁺-tick before time s. Consider the earliest real time, s', when some correct processor, say p_k, sends a (j + 1)⁺-tick. Note that s' < s, so c_k(s') ≤ C(s') by the inductive hypothesis. By definition of the protocol, c_k(s') ≥ j. Therefore, j ≤ c_k(s') ≤ C(s') ≤ C(s). □

LEMMA 5.2. For all s ≥ 0, the largest tick sent by a correct processor at real time s has size at most C(s) + 1.

PROOF. The proof is immediate from the protocol and Lemma 5.1. □

LEMMA 5.3. For all s, x ≥ 0, C(s + x) ≤ C(s) + x.

PROOF. The proof is by induction on x. For the basis, let x = 1. By Lemma 5.2 the largest tick sent by a correct processor by time s has size at most C(s) + 1, so the maximum tick that can be broadcast by t + 1 correct processors by time s + 1 is a (C(s) + 1)-tick. Thus, C(s + 1) ≤ C(s) + 1. Assume the lemma holds for some x.
Then

    C(s + (x + 1)) = C((s + 1) + x)
                   ≤ C(s + 1) + x        (by the induction hypothesis)
                   ≤ C(s) + (x + 1)      (by the basis). □

The preceding lemmas are independent of both communication and processor synchrony. Now we give several lemmas that assume such synchrony. We would like to state the lemmas in a way that applies to both kinds of partially synchronous models (Δ and Φ holding eventually and Δ and Φ unknown). So fix Δ and Φ (for either case). Also fix GST for the model in which Δ and Φ hold eventually. For the model in which Δ and Φ are unknown, define GST = 0, for uniformity.

The next few lemmas discuss the behavior of the clocks a short time after GST. Lemma 5.4 says that the private clocks increase at most a constant factor more slowly than real time. Lemmas 5.5 and 5.6 are technical lemmas used to prove Lemma 5.7. Lemma 5.7 has two parts: The first says that, at any particular real time, the master clock exceeds the value of the private clocks by at most an additive constant. The second part of Lemma 5.7 says that the master clock runs at a rate at most a constant factor slower than real time.

Let D = Δ + 3Φ. Note that, if a message is sent to a correct processor p at time s ≥ GST, then p will receive the message by time s + D: The message will be delivered by time s + Δ, and within an additional time 3Φ, p will execute a Receive operation in the clock protocol.

LEMMA 5.4. Assume s ≥ GST, and let s' = s + 12NΦ + D. Let j be such that c_i(s) ≥ j for all correct p_i. Then c_i(s') ≥ j + 1 for all correct p_i.

PROOF. At time s, p_i could be executing TICK(b) for some b < j. However, within time 6NΦ after s, p_i will call TICK(b') or CLAIM(b') for some b' ≥ j, and within an additional 6NΦ steps, p_i will broadcast a (j + 1)-claim. Therefore, every correct processor will broadcast a (j + 1)-claim by time s + 12NΦ. By time s', each correct p_i will receive at least 2t + 1 (j + 1)⁺-claims, so c_i(s') ≥ j + 1. □

LEMMA 5.5. Assume s ≥ GST, and let s' = s + 39NΦ + 4D. Then C(s') ≥ C(s) + 2.

PROOF. Let j = C(s). By definition of the master clock, t + 1 correct processors have broadcast a j-tick by time s. These t + 1 processors send a tick or claim of size at least j to every processor within the first 3NΦ steps after time s. Since these messages are sent after GST, they are received within D steps, so c_i(s + 3NΦ + D) ≥ j − 1 for all correct p_i. By three applications of Lemma 5.4, c_i(s') ≥ j + 2. So C(s') ≥ j + 2 by Lemma 5.1.
□

LEMMA 5.6. Let s_0 be the minimum time such that C(s_0) ≥ C(GST) + 2. (Time s_0 exists by Lemma 5.5.) Let s ≥ s_0 + D. Then c_i(s) ≥ C(s − D) − 1 for all correct p_i.

PROOF. Let j = C(s − D). Then t + 1 correct processors broadcast a j-tick by s − D. By Lemma 5.2, the largest tick sent by a correct processor by GST is a (C(GST) + 1)-tick. Since j ≥ C(GST) + 2, the j-ticks from correct processors are broadcast entirely after GST, so they are received by time s. Thus, for all correct p_i, c_i(s) ≥ j − 1. □

LEMMA 5.7. Let s_0 be the minimum time such that C(s_0) ≥ C(GST) + 2.
(a) For all s ≥ s_0 + D and for all correct processors p_i, c_i(s) ≥ C(s) − D − 1.
(b) For all s ≥ s_0 and for s' = s + 24NΦ + 3D, C(s') ≥ C(s) + 1.

PROOF
(a) Lemma 5.6 implies c_i(s) ≥ C(s − D) − 1. By Lemma 5.3, C(s) ≤ C(s − D) + D ≤ c_i(s) + 1 + D. Thus, c_i(s) ≥ C(s) − D − 1.
(b) Let x = s + D. Lemma 5.6 implies c_i(x) ≥ C(s) − 1 for all correct p_i. By two applications of Lemma 5.4, c_i(s') ≥ C(s) + 1. So C(s') ≥ C(s) + 1 by Lemma 5.1. □
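The time bounds in Lemmas 5.5 and 5.7(b) are sums of the waiting periods used in their proofs. The following Python sketch checks this bookkeeping; the function names and the sample values of N, Δ, and Φ are hypothetical and chosen only for illustration.

```python
def receive_delay(delta: int, phi: int) -> int:
    """D = Δ + 3Φ: delivery within Δ, then a clock Receive step within 3Φ."""
    return delta + 3 * phi


def lemma_5_4_wait(n: int, delta: int, phi: int) -> int:
    """Time for every correct private clock to advance by one: 12NΦ + D."""
    return 12 * n * phi + receive_delay(delta, phi)


def lemma_5_5_wait(n: int, delta: int, phi: int) -> int:
    """3NΦ to resend, D to receive, then three applications of Lemma 5.4."""
    d = receive_delay(delta, phi)
    return 3 * n * phi + d + 3 * lemma_5_4_wait(n, delta, phi)


def lemma_5_7b_wait(n: int, delta: int, phi: int) -> int:
    """D to reach x = s + D, then two applications of Lemma 5.4."""
    d = receive_delay(delta, phi)
    return d + 2 * lemma_5_4_wait(n, delta, phi)


# Sample values (assumptions for illustration only).
N, DELTA, PHI = 4, 10, 2
D = receive_delay(DELTA, PHI)

# The sums match the closed forms stated in the lemmas.
assert lemma_5_5_wait(N, DELTA, PHI) == 39 * N * PHI + 4 * D
assert lemma_5_7b_wait(N, DELTA, PHI) == 24 * N * PHI + 3 * D
```

With these sample values, D = 16, the Lemma 5.5 bound is 376 steps, and the Lemma 5.7(b) bound is 240 steps.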